Hexapod Robot (2018)

Introduction

The hexapod robot uses a master-slave control system. A high-performance processor acts as the master: it schedules the resources of each slave chip and takes on the computationally intensive work. The slave processors are mainly responsible for controlling the individual motion structures, which reduces the load on the master.

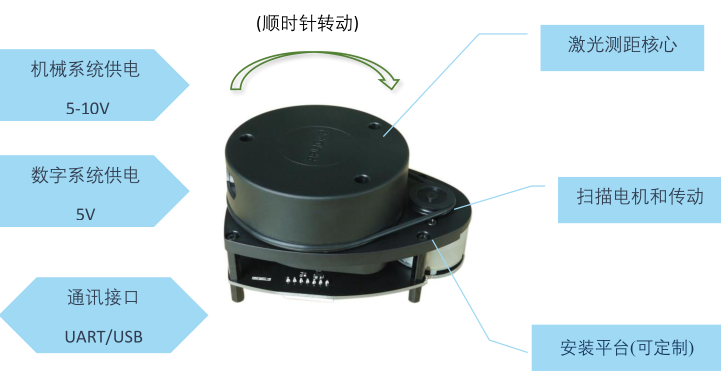

The hexapod robot is aimed at search and rescue in complex, narrow environments. We therefore equipped it with a lidar and a camera, and tried a ToF camera to improve the efficiency and accuracy of detection.

Over the one-year project, we implemented the following functions:

- Basic walking with multiple processors, with the robot performing the corresponding basic movements according to instructions from the remote control.

- Obstacle avoidance with multi-modal perception (foot pressure, gyroscope, point cloud, and RGB image).

- An algorithm for walking over uneven surfaces.
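
As an illustration of the basic walking function, here is a minimal sketch of leg scheduling for a tripod gait, a common hexapod gait in which two groups of three legs alternate between swing and stance. Whether this project used a tripod gait is an assumption, and the leg numbering, `TRIPOD_A`, `TRIPOD_B`, `swing_legs`, and `stance_legs` are hypothetical names chosen for the example.

```python
# Hypothetical leg numbering (not taken from the project):
# 0 = left-front, 1 = right-front, 2 = left-middle,
# 3 = right-middle, 4 = left-rear, 5 = right-rear
TRIPOD_A = (0, 3, 4)
TRIPOD_B = (1, 2, 5)


def swing_legs(t, period=1.0):
    """Return the legs that are lifted (swinging) at time t.

    Tripod A swings during the first half of each gait period,
    tripod B during the second half.
    """
    phase = (t % period) / period
    return TRIPOD_A if phase < 0.5 else TRIPOD_B


def stance_legs(t, period=1.0):
    """Return the legs that are on the ground (supporting) at time t."""
    swinging = swing_legs(t, period)
    return tuple(leg for leg in range(6) if leg not in swinging)
```

With this schedule, three legs always support the body, which keeps the robot statically stable while the other three move forward.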

Components

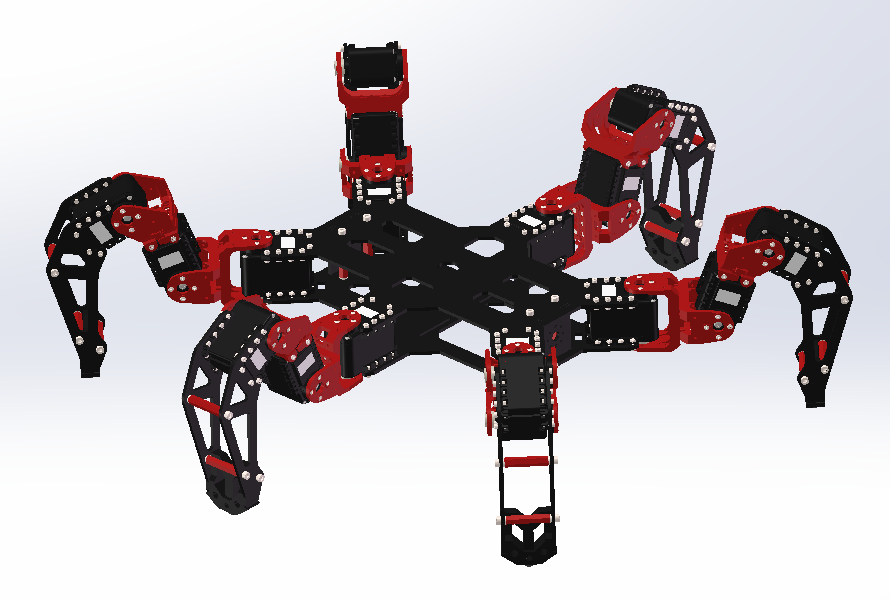

Mechanical modelling design

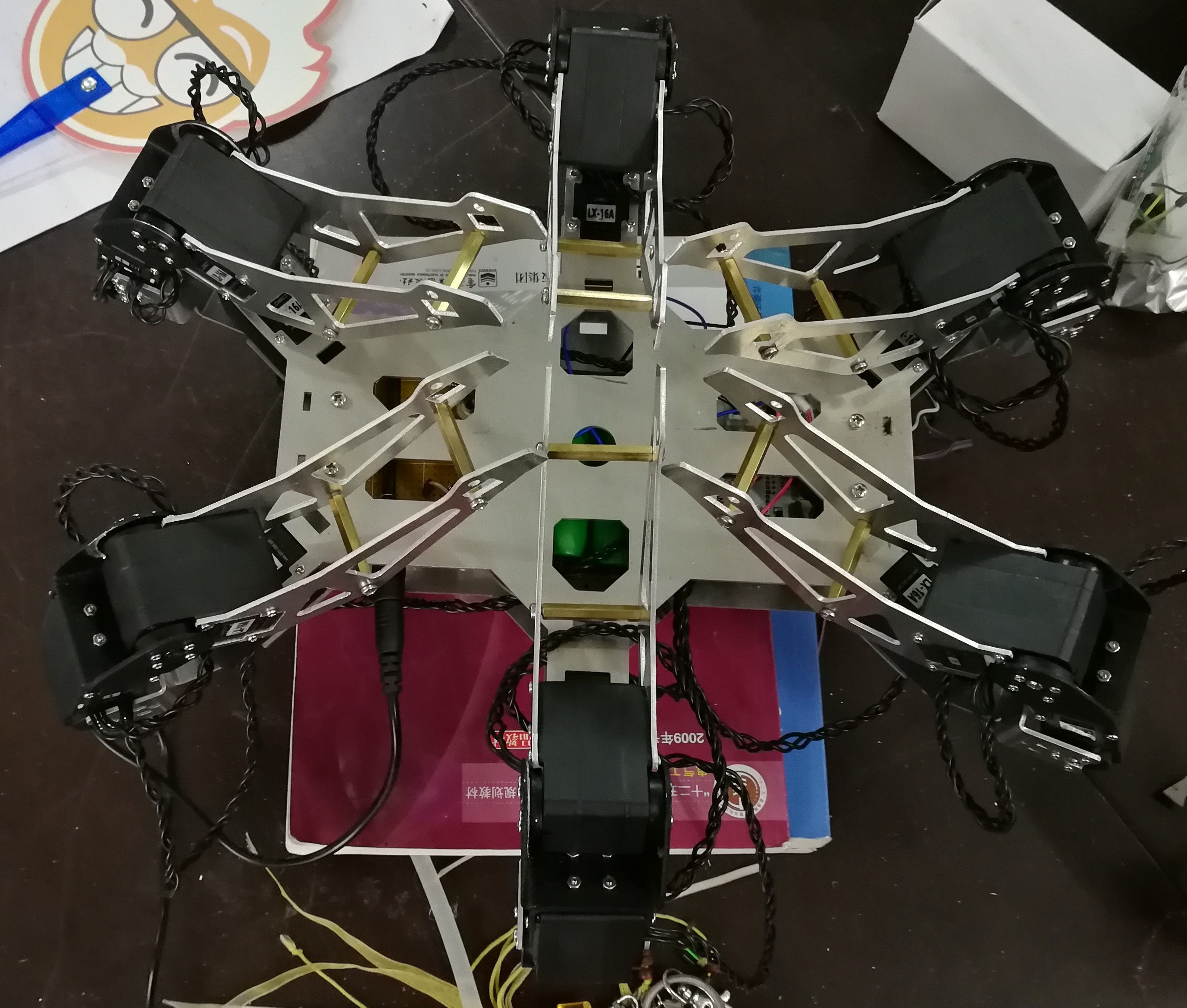

The image shows the 3D model of our hexapod robot, rendered in SolidWorks. We then ordered a set of customized aluminium alloy boards to balance rigidity and light weight.

Because of the large number of servo motors (6 × 3 = 18), we chose bus servos to reduce the effort of controlling each one. However, we underestimated the total weight of the robot, and the servo torque cannot support it stably, so the robot can only move slowly (brushless motors might be better, but they were too difficult for us at the time).
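
The torque problem can be made concrete with a rough static estimate: each supporting leg carries a share of the robot's weight, and the joint torque is that force times the horizontal lever arm. The sketch below assumes the weight is shared equally among three stance legs. The function names are hypothetical, and the 3 kg mass and 0.10 m lever arm are illustrative values only, not measurements from this robot.

```python
G = 9.80665  # standard gravity in m/s^2


def holding_torque_nm(robot_mass_kg, lever_arm_m, stance_legs=3):
    """Static torque (N*m) at a joint carrying an equal share of the weight.

    Assumes the weight is split evenly across the stance legs and acts
    at a horizontal distance lever_arm_m from the joint axis.
    """
    if stance_legs <= 0:
        raise ValueError("stance_legs must be positive")
    force_per_leg = robot_mass_kg * G / stance_legs
    return force_per_leg * lever_arm_m


def nm_to_kgcm(torque_nm):
    """Convert N*m to kgf*cm, the unit commonly used on servo datasheets."""
    return torque_nm / (G * 0.01)


# Illustrative numbers only: a 3 kg robot with a 0.10 m lever arm.
required = holding_torque_nm(3.0, 0.10)
required_kgcm = nm_to_kgcm(required)
```

Comparing such an estimate with the stall torque on the servo datasheet, with a safety margin for dynamic loads, gives an early warning before the frame is manufactured.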

Processors

The master processor in the project is a Raspberry Pi 3B+, while the slave is an STM32 MCU. We chose the former because it is one of the most popular embedded computers, with good computational power and a large community, so plenty of information and material is available. The latter is the MCU type we used most often in the electrical design competition, so we used it here as well.

The Raspberry Pi processes the RGB, point cloud, and depth data, while the STM32 works with the pressure sensors, parses commands from the master, and sends specific control messages over a socket. To coordinate the different hardware parts, we installed ROS Kinetic on the Raspberry Pi and created an interface for the STM32 part based on ros_control as the motion controller.
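
To illustrate what parsing a command from the master can involve, here is a minimal sketch of a framed command message with a checksum. The frame layout (header byte, command ID, payload length, payload, checksum), the `HEADER` value, and the function names are all assumptions made for the example, not the protocol used in this project. The sketch is written for Python 3.

```python
import struct

HEADER = 0xAA  # hypothetical start-of-frame marker


def encode_command(cmd_id, payload=b""):
    """Build a frame: header, command ID, payload length, payload, checksum."""
    if not 0 <= cmd_id <= 0xFF:
        raise ValueError("cmd_id must fit in one byte")
    if len(payload) > 0xFF:
        raise ValueError("payload too long")
    body = struct.pack("BBB", HEADER, cmd_id, len(payload)) + payload
    checksum = sum(body) & 0xFF  # low byte of the sum of all preceding bytes
    return body + bytes([checksum])


def decode_command(frame):
    """Validate a frame and return (cmd_id, payload)."""
    if len(frame) < 4:
        raise ValueError("frame too short")
    header, cmd_id, length = struct.unpack("BBB", frame[:3])
    if header != HEADER:
        raise ValueError("bad header")
    if len(frame) != 4 + length:
        raise ValueError("length mismatch")
    if sum(frame[:-1]) & 0xFF != frame[-1]:
        raise ValueError("bad checksum")
    return cmd_id, frame[3:3 + length]
```

A fixed header and a checksum let the receiver reject corrupted or misaligned frames instead of driving the servos with bad data.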

Sensors

The sensors shown below were used in the project (the ToF camera was only trialled).

Algorithms

To be updated.

Awards

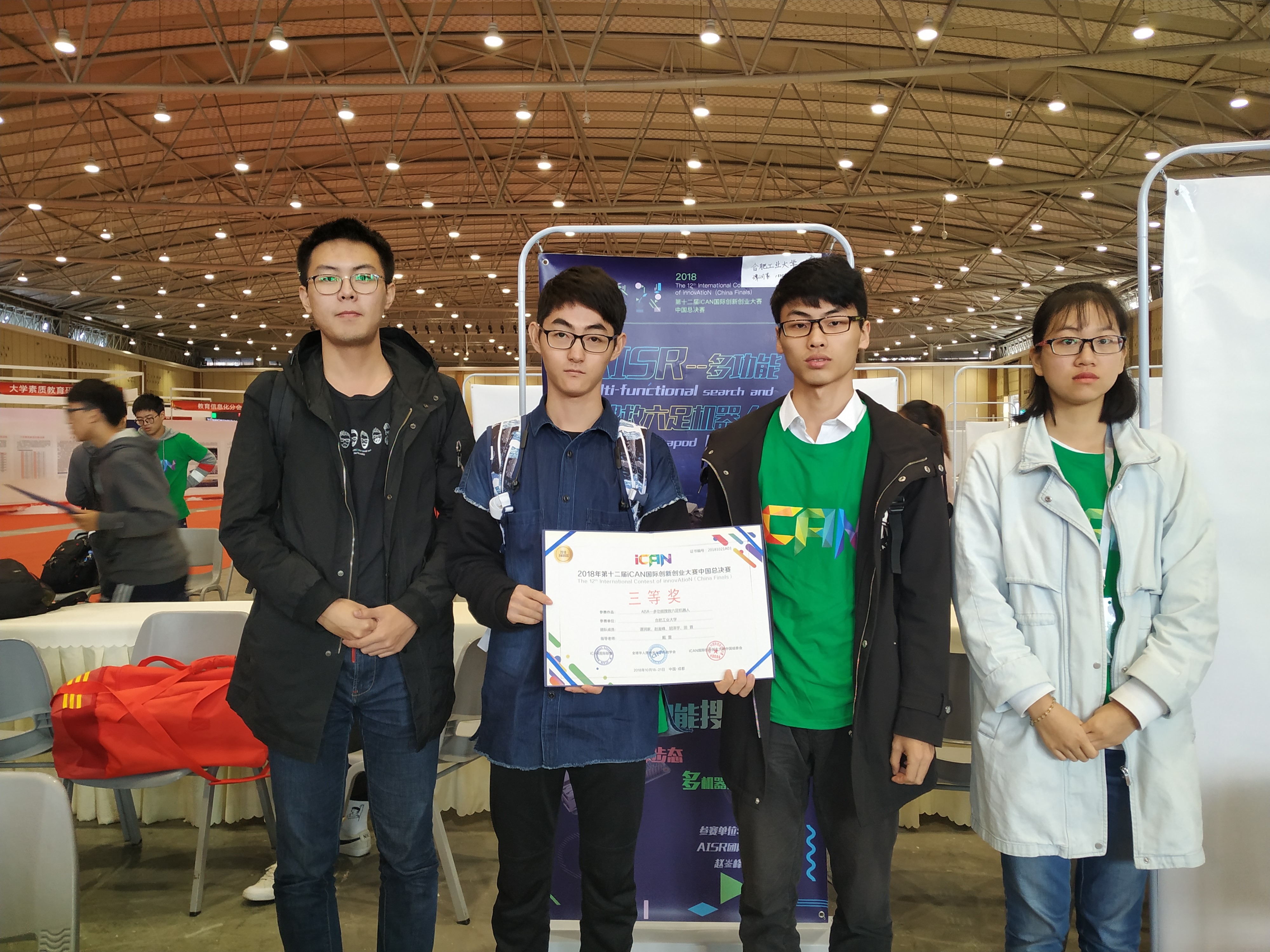

- Provincial Innovation and Entrepreneurship Training Program for College Students: Excellent

- Third Prize of the 12th iCAN International Contest of innovAtioN, 2018

Thanks to my team members! :)